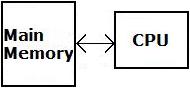

In a computer's normal operation, program code and data are transferred from some form of physical storage, e.g. a hard disk, into main memory (random access memory, or RAM), where the processor (central processing unit, or CPU) can access them quickly. In the first generation of personal computers, the processor interfaced directly with memory through memory control circuitry, as shown below.

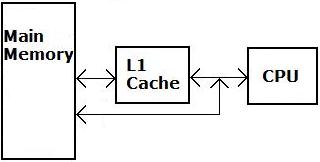

It was soon recognized that after the processor accessed a location in memory, there was a high probability that the next location it accessed would be in the same area of memory (called a "block" or "line"). So, to make the computer run faster, a smaller, faster memory (called a "cache", pronounced "cash") was placed between main memory and the processor. When the processor accessed a location in memory, a copy of the entire block containing that location was loaded into the cache.
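To see why loading a whole area of memory at once pays off, here is a minimal Python sketch. It assumes a hypothetical block size of 64 bytes and an unbounded cache that never evicts anything, and it simply counts how many accesses miss (find their block absent from the cache). Walking through consecutive addresses misses only once per block, while jumping far ahead on every access misses every time.

```python
# Minimal sketch: on a miss, the cache loads the whole block containing
# the requested address. BLOCK_SIZE is an assumed value; real sizes vary.
BLOCK_SIZE = 64  # bytes per block (hypothetical)


def count_misses(addresses, block_size=BLOCK_SIZE):
    """Count misses in an unbounded cache that loads whole blocks."""
    cached_blocks = set()  # block numbers currently held in the cache
    misses = 0
    for addr in addresses:
        block = addr // block_size  # which block holds this address
        if block not in cached_blocks:
            misses += 1
            cached_blocks.add(block)  # load the entire block
    return misses


sequential = list(range(1024))                # 1024 consecutive bytes
scattered = [i * 4096 for i in range(1024)]   # jump 4096 bytes each time

print(count_misses(sequential))  # 16
print(count_misses(scattered))   # 1024
```

With 1,024 consecutive addresses and 64-byte blocks, only 16 accesses miss; the scattered pattern misses on all 1,024. Real caches have a limited size and must evict old blocks, which this sketch ignores.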

When the processor needs to access a location in main memory, it first checks whether a copy of that data is in the cache. If so, the processor accesses the cache, which is faster than accessing main memory. One reason cache memory is faster is that it is built from static RAM (SRAM), while main memory is usually dynamic RAM (DRAM).
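The check-the-cache-first behavior can be sketched as follows. The class name `CachedMemory` and the latencies (1 ns for the cache, 60 ns for main memory) are hypothetical values chosen for illustration; for simplicity the sketch caches single addresses rather than whole blocks and never runs out of space.

```python
# Hypothetical latencies in nanoseconds, for illustration only.
CACHE_NS = 1
DRAM_NS = 60


class CachedMemory:
    """Toy model: a cache (stands in for SRAM) in front of main memory (DRAM)."""

    def __init__(self, main_memory):
        self.main_memory = main_memory  # backing store, indexed by address
        self.cache = {}                 # address -> cached copy of the value
        self.elapsed_ns = 0             # total simulated access time

    def read(self, addr):
        # First check whether a copy of the data is already in the cache.
        if addr in self.cache:
            self.elapsed_ns += CACHE_NS
            return self.cache[addr]
        # Miss: pay for the cache check plus the trip to main memory,
        # then keep a copy in the cache for next time.
        self.elapsed_ns += CACHE_NS + DRAM_NS
        value = self.main_memory[addr]
        self.cache[addr] = value
        return value
```

For example, the first `read(5)` on a fresh `CachedMemory` misses and costs 61 ns in this model; a second `read(5)` hits and adds only 1 ns.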

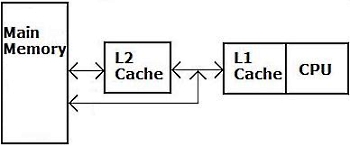

It was also recognized that a computer could run even faster if the cache itself had a cache. In 486DX computers, the first cache (called the "level 1", or L1, cache) was built into the CPU itself, and a second cache (the L2 cache) was implemented as a separate chip on the motherboard. The L1 cache is the smaller of the two (up to 128 kB), while the L2 cache can be up to 256 kB.
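A two-level lookup can be sketched like this: the processor tries L1, then L2, and goes to main memory only if both miss. The capacities (2 and 8 entries), the least-recently-used replacement policy, and the names `Level` and `access` are assumptions for illustration; real L1 and L2 caches are far larger and use their own hardware-specific policies.

```python
from collections import OrderedDict


class Level:
    """One cache level with a fixed capacity and least-recently-used eviction."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.lines = OrderedDict()  # addresses held, oldest use first

    def lookup(self, addr):
        if addr in self.lines:
            self.lines.move_to_end(addr)  # mark as most recently used
            return True
        return False

    def fill(self, addr):
        self.lines[addr] = True
        self.lines.move_to_end(addr)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict least recently used


def access(levels, addr):
    """Search levels fastest-first; return the name of the level that hit, or 'RAM'."""
    for i, level in enumerate(levels):
        if level.lookup(addr):
            for faster in levels[:i]:  # copy into the faster levels that missed
                faster.fill(addr)
            return level.name
    for level in levels:  # missed everywhere: load from RAM into every level
        level.fill(addr)
    return "RAM"


l1 = Level("L1", capacity=2)
l2 = Level("L2", capacity=8)
hierarchy = [l1, l2]
for addr in [0, 1, 2, 0]:
    print(addr, access(hierarchy, addr))
```

In this run, address 0 has been evicted from the tiny L1 by the time it is requested again, but it is still in the larger L2, so the L2 serves it without a trip to RAM.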

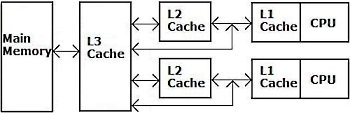

The Pentium Pro was the first processor to have both the L1 and L2 caches built into the CPU package. Many multi-core processors, in addition to having L1 and L2 caches built into each core, have an L3 cache that all the cores share. In current multi-core processors this L3 cache is on the processor chip itself, although some earlier systems placed a third-level cache on the motherboard.

CPU caches are not the only caches in a computer. For example, modern hard disks contain a RAM cache that holds the data most recently accessed on the disk. In fact, a modern computer is laced with all kinds of buffers and caches, all intended to make it run faster.
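The idea of keeping the most recently accessed data close at hand can be sketched with Python's standard `functools.lru_cache` decorator, which keeps up to `maxsize` recent results and discards the least recently used one when full. The function `read_sector` is a hypothetical stand-in for a slow disk read; an actual drive's cache is implemented in its firmware and need not use this exact policy.

```python
import functools

disk_reads = 0  # counts how often the simulated physical disk is touched


@functools.lru_cache(maxsize=4)  # keep the 4 most recently used sectors
def read_sector(sector):
    """Hypothetical stand-in for a slow physical disk read."""
    global disk_reads
    disk_reads += 1
    return f"data from sector {sector}"


for s in [7, 7, 3, 7, 3]:
    read_sector(s)

print(disk_reads)  # 2
print(read_sector.cache_info())
```

Of the five reads, only the first read of sector 7 and the first read of sector 3 reach the simulated disk; the other three are served from the cache.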

"Latency" refers to the delay incurred when a computer tries to access data in memory. Do all these caches really reduce latency? Cache controllers use complicated schemes developed from statistical studies, and thousands of performance tests (called "benchmarks") have been performed to show that they do.
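One common way to quantify whether caches reduce latency, which the article does not itself use, is the average memory access time: hit time + miss rate × miss penalty, where the miss penalty of one level is the average access time of the level below it. The sketch below applies that formula to hypothetical figures (60 ns main memory, a 4 ns L2 that misses 1 access in 4, a 1 ns L1 that misses 1 access in 16); the numbers are illustrative assumptions, not measurements or benchmark results.

```python
def amat(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical figures for illustration only (not measured values).
DRAM_NS = 60.0
L2_HIT_NS, L2_MISS_RATE = 4.0, 0.25    # L2 misses 1 access in 4
L1_HIT_NS, L1_MISS_RATE = 1.0, 0.0625  # L1 misses 1 access in 16

no_cache = DRAM_NS                                  # every access goes to RAM
l2_only = amat(L2_HIT_NS, L2_MISS_RATE, DRAM_NS)    # 4 + 0.25 * 60 = 19.0 ns
two_level = amat(L1_HIT_NS, L1_MISS_RATE, l2_only)  # 1 + 0.0625 * 19 = 2.1875 ns

print(f"no cache: {no_cache} ns, L2 only: {l2_only} ns, L1 + L2: {two_level} ns")
```

With these assumed figures, an L2 alone cuts the average from 60 ns to 19 ns, and adding an L1 in front of it brings the average down to about 2.2 ns.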
